Introduction

What happens when artificial intelligence becomes so powerful that it shapes decisions, behaviors, and societies without accountability? As we move deeper into the AI-driven era, AI ethics and responsible AI development have never been more critical, especially for IT companies at the forefront of innovation. Ensuring fairness, transparency, and accountability in AI systems is not just a regulatory requirement; it’s a moral imperative. From preventing algorithmic bias to protecting data privacy, ethical AI practices are essential for building trust and long-term sustainability in digital solutions.

At True Value Infosoft, we recognize this growing responsibility. As a leading AI app development company in India, we are deeply committed to embedding ethical standards in every phase of our AI projects — from model training to deployment. Our approach prioritizes not only cutting-edge functionality but also the societal impact of the technologies we build. We believe that responsible AI development is the key to creating solutions that empower businesses while respecting human rights and values. In this blog, we’ll explore why AI ethics is essential for IT companies in 2025, how it impacts innovation, and what strategies True Value Infosoft employs to lead with integrity in the AI revolution.

Understanding AI Ethics

AI ethics refers to the principles and guidelines that govern the development, deployment, and use of AI technologies. It addresses the moral dilemmas and societal impacts that arise when machines perform tasks traditionally done by humans. Its core principles include:

- Fairness: AI systems must avoid discrimination and bias, ensuring equitable treatment across gender, race, ethnicity, and other dimensions.

- Transparency: Users and stakeholders should understand how AI models make decisions, promoting explainability and openness.

- Privacy: AI development must safeguard individuals’ data, ensuring confidentiality and informed consent.

- Accountability: Developers and organizations should be responsible for the outcomes of AI applications, including errors or harms caused.

- Beneficence: AI should be designed to promote well-being and prevent harm.

- Autonomy: AI should respect human agency and decision-making in the contexts where it is used.

These principles provide a foundation, but practical implementation often requires balancing competing interests and navigating complex scenarios.

Why AI Ethics is Crucial in IT

1. Mitigating Bias and Discrimination

AI systems are only as good as the data they are trained on. Biased data can result in AI models that perpetuate or exacerbate social inequalities. In recruitment, for example, biased algorithms may disadvantage minority groups. Ethical AI development prioritizes bias detection and mitigation techniques, such as diverse training data and fairness-aware algorithms.
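
As a rough illustration of what bias detection can look like in a recruitment setting, the sketch below compares selection rates across groups and computes a disparate impact ratio. The group labels, the sample outcomes, and the function names are all hypothetical, and the 0.8 threshold is the "four-fifths" rule of thumb from US employment guidance, not a universal standard.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the share of positive outcomes per group.

    `records` is an iterable of (group, selected) pairs, where `selected`
    is True when the model recommended the candidate.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, selected in records:
        totals[group] += 1
        if selected:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact_ratio(records):
    """Lowest group selection rate divided by the highest one."""
    rates = selection_rates(records)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected, so no group is favoured
    return min(rates.values()) / highest


# Hypothetical screening outcomes: (group label, was shortlisted)
outcomes = (
    [("group_a", True)] * 40 + [("group_a", False)] * 60
    + [("group_b", True)] * 20 + [("group_b", False)] * 80
)

ratio = disparate_impact_ratio(outcomes)
print(f"selection rates: {selection_rates(outcomes)}")
print(f"disparate impact ratio: {ratio:.2f}")
if ratio < 0.8:  # rule-of-thumb threshold, an assumption for this sketch
    print("Potential adverse impact: review the data and the model.")
```

A low ratio does not prove discrimination on its own, but it is a useful signal that the training data or the model deserves a closer look.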

2. Enhancing Trust and Adoption

Users are more likely to adopt AI technologies they trust. Ethical considerations such as transparency and privacy assurance foster confidence. If users feel their data is handled responsibly and AI decisions are explainable, they are more inclined to engage with AI-powered systems.

3. Ensuring Compliance with Regulations

Regulatory bodies worldwide are introducing AI governance frameworks. The European Union’s AI Act and the proposed U.S. Algorithmic Accountability Act are examples. Ethical AI practices help IT organizations stay compliant, avoid penalties, and protect their reputations.

4. Preventing Harm and Ensuring Safety

Unethical AI deployment can cause direct harm—such as accidents in autonomous vehicles—or indirect harm like misinformation spreading through AI-generated content. Responsible development practices incorporate safety measures and risk assessment to prevent such outcomes.

5. Driving Sustainable Innovation

Ethical practice encourages innovation that benefits society broadly rather than creating short-term gains at the expense of marginalized groups or the environment. Ethical AI fosters long-term, sustainable technological progress.

Key Challenges in AI Ethics

Despite growing awareness, several challenges complicate the pursuit of ethical AI:

- Data Quality and Bias: Obtaining unbiased, high-quality data is difficult due to historical and social biases embedded in datasets.

- Algorithmic Transparency: Complex AI models, especially deep learning, often operate as “black boxes,” making explainability challenging.

- Global Ethical Standards: Diverse cultural, legal, and societal norms complicate establishing universal ethical guidelines.

- Accountability Gaps: Determining who is responsible for AI decisions—developers, deployers, or users—is legally and ethically complex.

- Balancing Innovation and Regulation: Overregulation might stifle innovation, while underregulation risks harm.

Principles for Responsible AI Development

To navigate these challenges, IT organizations adopt several principles and best practices:

1. Inclusive Design

Engaging diverse stakeholders—including ethicists, affected communities, and domain experts—during AI design reduces blind spots and fosters equity.

2. Explainability and Interpretability

Designing AI systems that provide clear explanations for their decisions helps users understand and trust outcomes.
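
One simple, model-agnostic way to explain which inputs drive a decision is permutation importance: shuffle one feature at a time and measure how much accuracy drops. The sketch below is a minimal illustration using only the standard library. The toy approval model, its two features, and the synthetic data are assumptions made for the example.

```python
import random


def accuracy(model, rows, labels):
    """Fraction of rows for which the model output matches the label."""
    correct = sum(1 for row, label in zip(rows, labels) if model(row) == label)
    return correct / len(rows)


def permutation_importance(model, rows, labels, n_features, repeats=20, seed=0):
    """Average drop in accuracy when each feature column is shuffled."""
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    importances = []
    for j in range(n_features):
        drops = []
        for _ in range(repeats):
            column = [row[j] for row in rows]
            rng.shuffle(column)
            # Rebuild each row with the shuffled value in position j.
            shuffled = [
                row[:j] + (value,) + row[j + 1:]
                for row, value in zip(rows, column)
            ]
            drops.append(baseline - accuracy(model, shuffled, labels))
        importances.append(sum(drops) / repeats)
    return importances


# Hypothetical model: approves when feature 0 exceeds 50 and ignores feature 1.
def toy_model(row):
    return row[0] > 50


data_rng = random.Random(42)
rows = [(data_rng.uniform(0, 100), data_rng.uniform(0, 100)) for _ in range(200)]
labels = [toy_model(row) for row in rows]

scores = permutation_importance(toy_model, rows, labels, n_features=2)
for index, score in enumerate(scores):
    print(f"feature {index}: importance {score:.3f}")
```

Here the ignored feature scores zero, which is the kind of plain statement ("this input did not affect the outcome") that users and auditors can act on.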

3. Robustness and Safety

Testing AI systems extensively for vulnerabilities, errors, and unintended behaviors ensures reliable operation in real-world conditions.

4. Privacy by Design

Incorporating privacy considerations from the outset through data minimization, anonymization, and secure storage protects users’ information.
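
A minimal sketch of data minimization combined with pseudonymization follows. The field names, the allow-list, and the key are hypothetical. Note that a keyed hash is pseudonymization rather than full anonymization: whoever holds the key can still link records, so the key itself must be protected.

```python
import hashlib
import hmac

# Hypothetical allow-list: only the fields the model actually needs.
ALLOWED_FIELDS = {"age_band", "region", "purchase_count"}


def pseudonymize(user_id: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed hash (HMAC-SHA256)."""
    return hmac.new(secret_key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def minimize(record: dict, secret_key: bytes) -> dict:
    """Keep allow-listed fields and swap the raw ID for a pseudonym."""
    cleaned = {k: v for k, v in record.items() if k in ALLOWED_FIELDS}
    cleaned["user_ref"] = pseudonymize(record["user_id"], secret_key)
    return cleaned


# Illustration only: a real key would come from a secrets manager, not source code.
key = b"example-key-for-illustration-only"
raw = {
    "user_id": "u-1029",
    "email": "person@example.com",
    "age_band": "25-34",
    "region": "Rajasthan",
    "purchase_count": 7,
}

safe = minimize(raw, key)
print(safe)
```

Using an allow-list instead of a block-list means that any new field added upstream is dropped by default until someone decides it is needed.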

5. Continuous Monitoring and Evaluation

AI models must be regularly assessed post-deployment to detect drift in performance or emerging ethical issues, enabling timely intervention.
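
One common way to watch for drift is the Population Stability Index (PSI), which compares the distribution of a feature at training time with what the model sees in production. The sketch below is a simplified, standard-library version with equal-width bins. The sample data is synthetic, and the 0.25 alert threshold is a widely quoted rule of thumb rather than a fixed standard.

```python
import math


def psi(expected, actual, bins=10, eps=1e-6):
    """Population Stability Index between two samples of a numeric feature."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # avoid a zero width for constant data

    def proportions(values):
        counts = [0] * bins
        for value in values:
            index = int((value - lo) / width)
            index = min(max(index, 0), bins - 1)  # clamp out-of-range values
            counts[index] += 1
        # Floor at eps so empty bins do not cause log(0) or division by zero.
        return [max(count / len(values), eps) for count in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Synthetic example: training data versus a shifted production sample.
training = [i / 10 for i in range(1000)]
live_shifted = [value + 30 for value in training]

print(f"PSI, same data:    {psi(training, training):.3f}")
print(f"PSI, shifted data: {psi(training, live_shifted):.3f}")
if psi(training, live_shifted) > 0.25:  # rule-of-thumb alert level
    print("Significant drift: consider investigating or retraining.")
```

Running a check like this on a schedule gives the team an early, quantitative trigger for the deeper performance and fairness review.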

6. Ethical Training and Awareness

Educating AI developers, IT staff, and leadership about ethical risks and responsibilities promotes a culture of accountability.

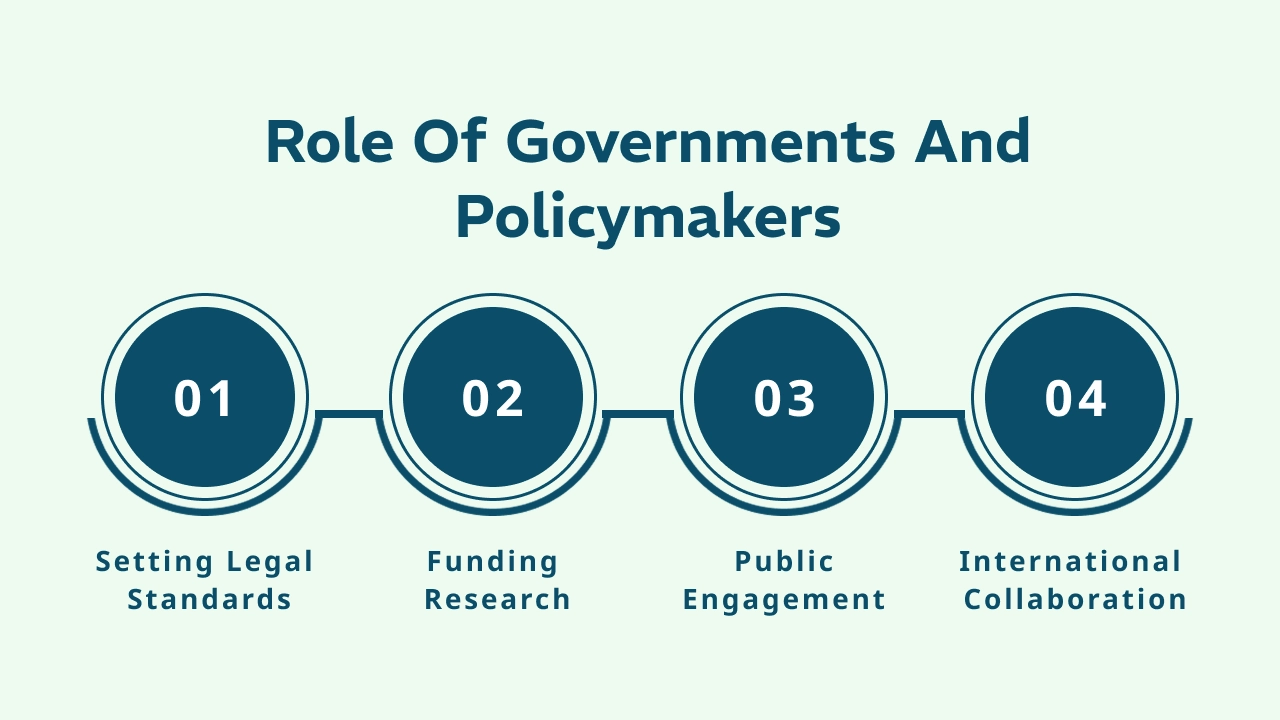

Role of Governments and Policymakers

Governments have a critical role in shaping AI ethics frameworks:

- Setting Legal Standards: Enacting laws that mandate transparency, fairness, and safety.

- Funding Research: Supporting interdisciplinary research on AI ethics and impact assessment.

- Public Engagement: Involving citizens in policymaking to align AI use with societal values.

- International Collaboration: Harmonizing regulations to address global AI challenges like cross-border data flow.

Industry Initiatives and Frameworks

Many organizations have developed AI ethics guidelines to guide responsible development:

- IEEE’s Ethically Aligned Design: Provides comprehensive recommendations for human-centric AI.

- Partnership on AI: A coalition promoting best practices and transparency.

- Google’s AI Principles: Focus on social benefit, fairness, and avoiding harm.

- Microsoft’s Responsible AI Standard: Emphasizes inclusivity, reliability, and privacy.

These frameworks serve as valuable resources for IT companies aiming to implement ethical AI.

Technologies Supporting Ethical AI

Emerging tools assist developers in ethical AI implementation:

- Bias Detection Tools: Software that identifies and mitigates biases in training data or models.

- Explainable AI (XAI): Techniques like SHAP, LIME, and attention visualization provide insights into model decisions.

- Privacy Enhancing Technologies: Differential privacy, federated learning, and homomorphic encryption help protect sensitive data.

- Audit and Compliance Platforms: Automated systems that verify adherence to ethical standards.
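
To make one of the privacy-enhancing techniques above more concrete, here is a minimal sketch of the Laplace mechanism, a basic building block of differential privacy, applied to a counting query. The data, the epsilon value, and the function names are assumptions for illustration. Production systems should rely on a vetted differential privacy library rather than hand-rolled noise.

```python
import random


def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(values, predicate, epsilon, rng):
    """Counting query with Laplace noise calibrated to sensitivity 1.

    Adding or removing one person changes a count by at most 1, so the
    noise scale is 1 / epsilon. Smaller epsilon means more privacy and
    more noise.
    """
    true_count = sum(1 for value in values if predicate(value))
    return true_count + laplace_noise(1.0 / epsilon, rng)


# Hypothetical ages in a small dataset.
ages = [23, 35, 41, 52, 29, 64, 47, 38, 55, 31]


def over_40(age):
    return age > 40


rng = random.Random(7)
noisy = private_count(ages, over_40, epsilon=0.5, rng=rng)
print(f"true count: {sum(1 for age in ages if over_40(age))}")
print(f"noisy count released to analysts: {noisy:.2f}")
```

Each released answer is noisy, but averages over many queries stay close to the truth, which is why real deployments also track a privacy budget across queries.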

Case Studies Highlighting Ethical AI Practices

1. IBM Watson Health

IBM’s AI system in healthcare incorporates transparency and rigorous validation to ensure patient safety and ethical decision-making.

2. Salesforce’s Ethical AI Office

Salesforce established an AI Ethics Advisory Board to oversee responsible product development and community impact.

3. Google’s AI Fairness Initiative

Google has invested heavily in reducing bias in AI models used for image recognition and natural language processing.

The Human Element: Ethics Beyond Code

AI ethics extends beyond algorithms to organizational culture and societal impact:

- Diversity in AI Teams: Diverse teams reduce bias and broaden ethical perspectives.

- Stakeholder Engagement: Involving users and affected groups ensures AI serves real needs.

- Ethical Leadership: Leaders must champion transparency and accountability.

Why Choose True Value Infosoft as the Best IT Company in India?

Choosing the right IT partner is crucial for the success of any digital transformation or software development project. True Value Infosoft stands out as the best AI app development company in India due to its unwavering commitment to quality, innovation, and customer satisfaction. With years of industry experience, True Value Infosoft offers cutting-edge technology solutions tailored to meet the unique needs of diverse businesses worldwide. Their expert team of developers, designers, and strategists works collaboratively to deliver scalable, secure, and efficient IT services that drive growth and competitive advantage.

True Value Infosoft’s reputation is built on a foundation of trust, transparency, and ethical business practices. They prioritize understanding client requirements thoroughly and maintaining clear communication throughout the project lifecycle. This ensures timely delivery without compromising quality. Additionally, their emphasis on responsible AI development and ethical software practices sets them apart in today’s technology landscape.

Reasons to choose True Value Infosoft:

- Comprehensive Services: From AI and blockchain to mobile and web development, they cover all major IT domains.

- Skilled Professionals: Experienced team proficient in the latest technologies and methodologies.

- Customer-Centric Approach: Customized solutions with a focus on client goals and satisfaction.

- Affordable Pricing: Competitive rates without sacrificing quality.

- Strong Support: Reliable post-deployment maintenance and support.

Partner with True Value Infosoft to experience innovation driven by integrity.

Conclusion

As AI continues to embed itself into the fabric of modern life, the responsibility to develop it ethically cannot be overstated. The intersection of technology, morality, and society requires ongoing vigilance, innovation, and collaboration. For IT professionals, embracing AI ethics and responsible AI development is not just a compliance exercise but a commitment to shaping a future where technology uplifts humanity with fairness, transparency, and respect for human dignity.

FAQs

What is AI ethics, and why is it important?

AI ethics refers to the set of moral principles guiding the development and deployment of artificial intelligence. It is important because it helps ensure AI systems are fair, transparent, and accountable, and do not harm individuals or society.

How does True Value Infosoft approach ethical AI development?

True Value Infosoft integrates ethical principles like fairness, transparency, and privacy by design into its AI development process, ensuring AI solutions are trustworthy and aligned with user values.

What are the key challenges in implementing ethical AI?

Key challenges include mitigating bias in data, ensuring model explainability, protecting user privacy, establishing accountability, and balancing innovation with regulation.

How does ethical AI benefit IT companies?

Ethical AI fosters user trust, compliance with regulations, safer AI deployment, and sustainable innovation, ultimately enhancing brand reputation and long-term success.

Are there regulations governing AI ethics?

Yes. Regions such as the EU and the US have started introducing AI governance frameworks and laws to promote ethical AI use, such as the EU’s AI Act and the proposed Algorithmic Accountability Act in the US.